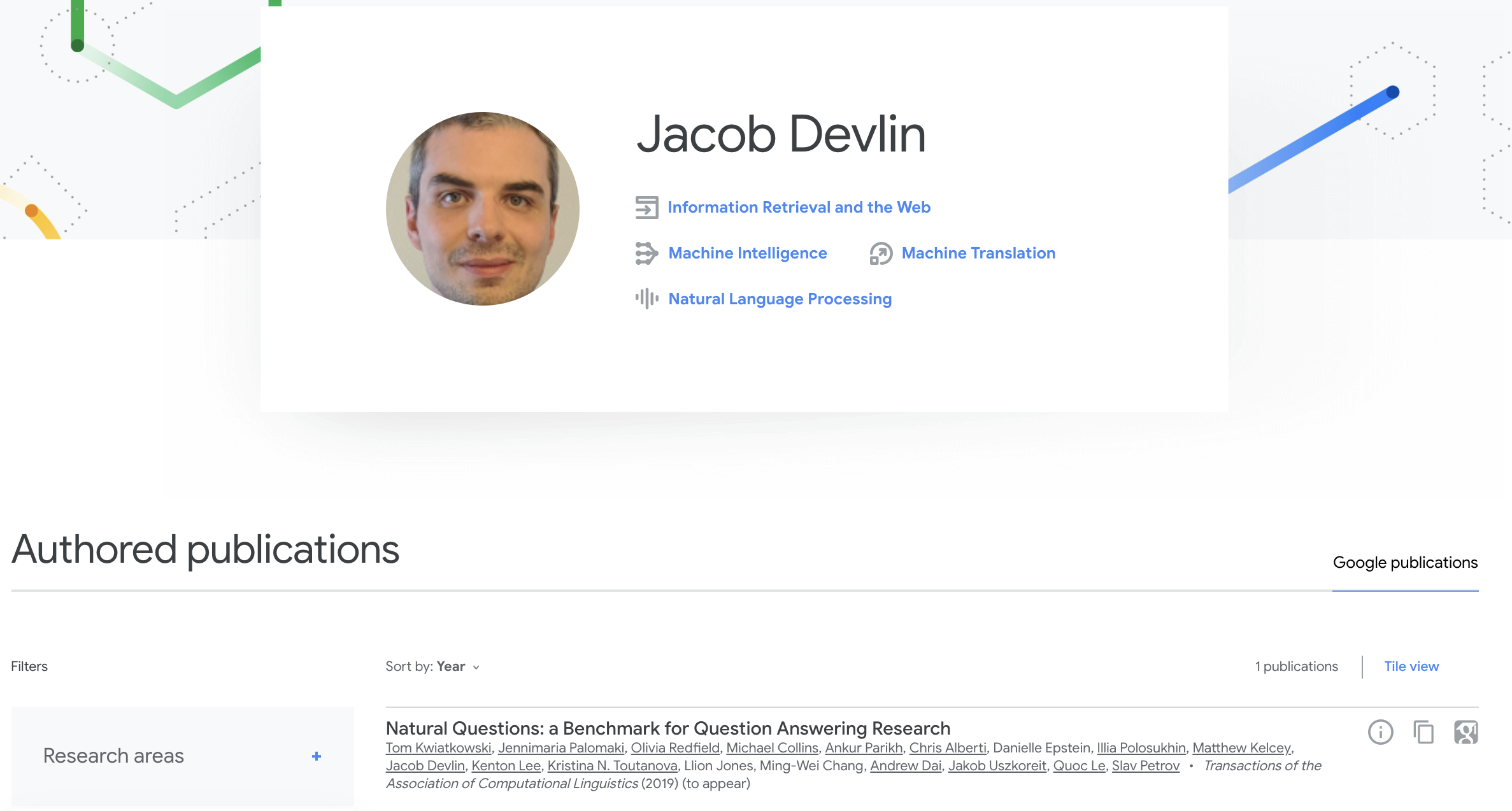

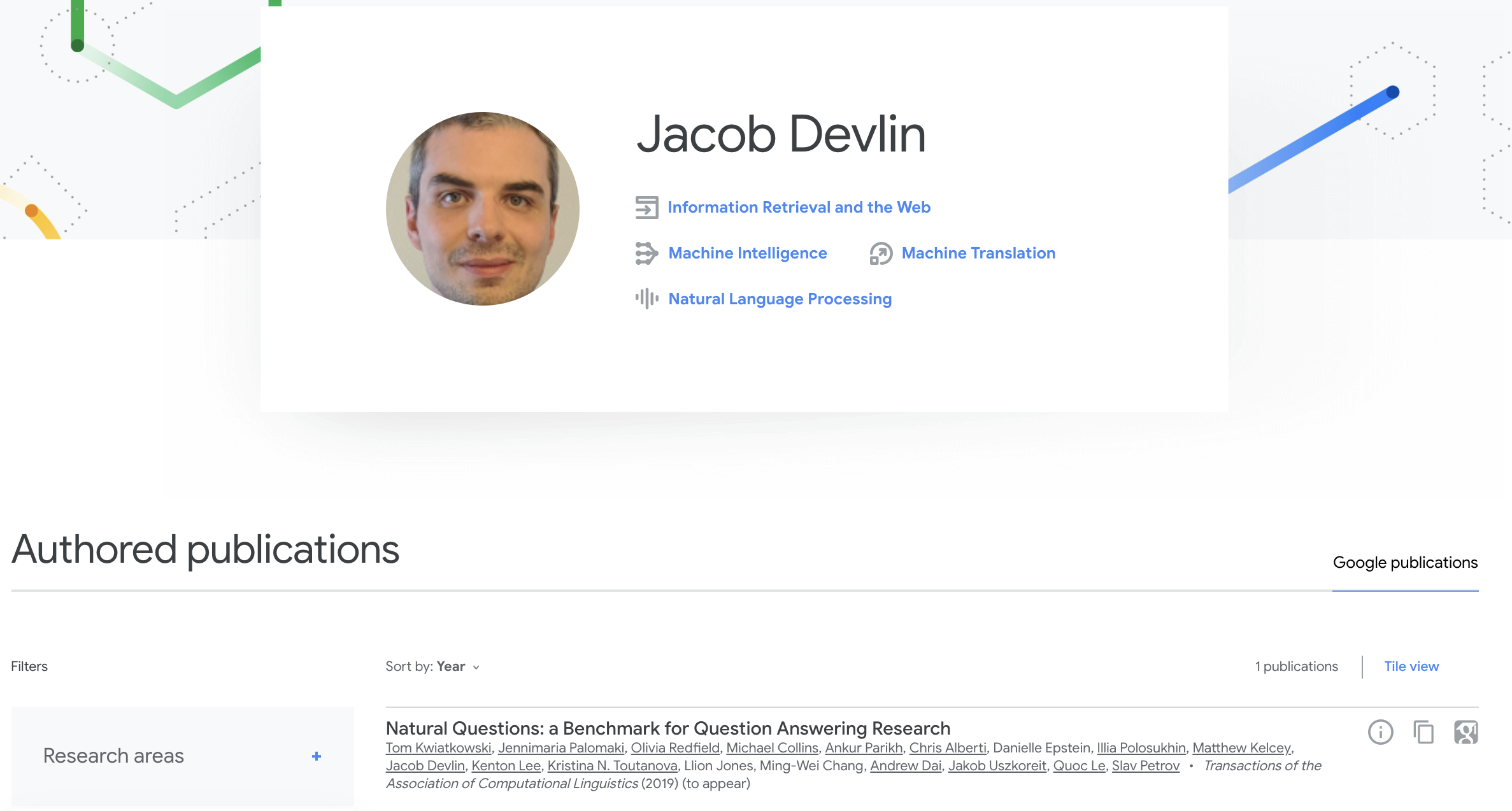

Author

- 저자:Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova (Google AI Language,

Google AI니 말다했지)

Who is an Author?

Jacob Devlin is a Senior Research Scientist at Google. At Google, his primary research interest is developing fast, powerful, and scalable deep learning models for information retrieval, question answering, and other language understanding tasks. From 2014 to 2017, he worked as a Principle Research Scientist at Microsoft Research, where he led Microsoft Translate’s transition from phrase-based translation to neural machine translation (NMT). He also developed state-of-the-art on-device models for mobile NMT. Mr. Devlin was the recipient of the ACL 2014 Best Long Paper award and the NAACL 2012 Best Short Paper award. He received his Master’s in Computer Science from the University of Maryland in 2009, advised by Dr. Bonnie Dorr.

{: height=”50%” width=”50%”}

{: height=”50%” width=”50%”}

느낀점

- Masking 기반의 Language Model과 context 추출을 위한 문장 연관성 (NSP) Task를 동시에 학습시켜서 Rich representation을 얻는다는 아이디어가 참신했음. 두마리 토끼를 한번에..!

- Bidirectional feature가 상당히 중요함

- pre-train 중요함

- NSP도 매우 중요함

- 여기서도 Loss Masking이 중요함

- CLS Loss와 LM Loss를 따로 떼서 계산해야함

- gelu, masking scheme 썼을때와 안썼을때 성능차이가 꽤 남

- segment embedding 처리하는게 은근 귀찮음, 전처리 할때 아예 생성해버리는게 편하긴함

- CLS acc 올리기보다 LM acc 올리는게 더 쉬움

{: height=”50%” width=”50%”}

{: height=”50%” width=”50%”} {: height=”50%” width=”50%”}

{: height=”50%” width=”50%”} {: height=”50%” width=”50%”}

{: height=”50%” width=”50%”} {: height=”50%” width=”50%”}

{: height=”50%” width=”50%”} {: height=”50%” width=”50%”}

{: height=”50%” width=”50%”} {: height=”50%” width=”50%”}

{: height=”50%” width=”50%”}