RoBERTa: A Robustly Optimized BERT Pretraining Approach

Authors

- Authors: Yinhan Liu∗§, Myle Ott∗§, Naman Goyal∗§, Jingfei Du∗§, Mandar Joshi†, Danqi Chen§, Omer Levy§, Mike Lewis§, Luke Zettlemoyer†§, Veselin Stoyanov§

- † Paul G. Allen School of Computer Science & Engineering, University of Washington, Seattle, WA

- § Facebook AI

- ∗ Equal contribution

Impressions

Abstract

- Hyperparameter choices have a significant impact on the final results.

- The paper carefully measures the impact of many key hyperparameters and of training data size.

- It finds that BERT was significantly undertrained and, when trained with the improved recipe, can match or exceed the performance of every model published after it.

Introduction

- We present a replication study of BERT pretraining (Devlin et al., 2019), which includes a careful evaluation of the effects of hyperparameter tuning and training set size.

- Proposed modifications to BERT pretraining (the resulting recipe is called RoBERTa):

  - (1) training the model longer, with bigger batches, over more data;

  - (2) removing the next sentence prediction objective;

  - (3) training on longer sequences; and

  - (4) dynamically changing the masking pattern applied to the training data.

- Contributions:

  - (1) We present a set of important BERT design choices and training strategies and introduce alternatives that lead to better downstream task performance;

  - (2) We use a novel dataset, CC-NEWS, and confirm that using more data for pretraining further improves performance on downstream tasks;

  - (3) Our training improvements show that masked language model pretraining, under the right design choices, is competitive with all other recently published methods.
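Modification (4) contrasts BERT's static masking, where the masked positions are chosen once during data preprocessing and then reused every epoch, with dynamic masking, where a fresh pattern is drawn each time a sequence is fed to the model. The Python sketch below illustrates the difference under stated assumptions: it applies BERT's 80/10/10 replacement rule, selects each position independently with probability 0.15 (an approximation of selecting exactly 15% of tokens), and the constants `MASK_ID`, `VOCAB_SIZE`, `IGNORE` and the helper names are hypothetical. It is not the authors' implementation.

```python
import random
from typing import Iterator, List, Tuple

# Hypothetical toy constants; a real tokenizer defines its own ids.
MASK_ID = 0
VOCAB_SIZE = 1000
IGNORE = -100  # label for unpredicted positions (mirrors PyTorch's default ignore_index)

Example = Tuple[List[int], List[int]]  # (model inputs, MLM labels)


def mask_tokens(tokens: List[int], rng: random.Random, mask_prob: float = 0.15) -> Example:
    """BERT-style masking: each selected position is replaced by MASK_ID 80% of
    the time, by a random token 10% of the time, and left unchanged 10% of the time."""
    inputs = list(tokens)
    labels = [IGNORE] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:  # select this position for prediction
            labels[i] = tok
            r = rng.random()
            if r < 0.8:
                inputs[i] = MASK_ID
            elif r < 0.9:
                inputs[i] = rng.randrange(VOCAB_SIZE)
            # otherwise keep the original token
    return inputs, labels


def static_epochs(dataset: List[List[int]], num_epochs: int, seed: int = 0) -> Iterator[Example]:
    """Static masking: mask once up front, then replay the same examples every epoch."""
    rng = random.Random(seed)
    masked = [mask_tokens(seq, rng) for seq in dataset]
    for _ in range(num_epochs):
        yield from masked


def dynamic_epochs(dataset: List[List[int]], num_epochs: int, seed: int = 0) -> Iterator[Example]:
    """Dynamic masking: draw a new mask every time a sequence is fed to the model."""
    rng = random.Random(seed)
    for _ in range(num_epochs):
        for seq in dataset:
            yield mask_tokens(seq, rng)


if __name__ == "__main__":
    data = [list(range(1, 41))]  # one toy sequence of 40 token ids
    static = [inputs for inputs, _ in static_epochs(data, num_epochs=3)]
    dynamic = [inputs for inputs, _ in dynamic_epochs(data, num_epochs=3)]
    print("static patterns identical: ", static[0] == static[1] == static[2])  # always True
    print("dynamic patterns identical:", dynamic[0] == dynamic[1] == dynamic[2])
```

Because `static_epochs` draws its masks before the epoch loop, every epoch sees the same pattern; `dynamic_epochs` calls `mask_tokens` inside the loop, so each pass over the data generally masks different positions.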
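Modification (1) trains with bigger batches. One common way to compare batch sizes, sketched below in Python, is to hold the total number of training sequences fixed, so that a larger batch needs proportionally fewer optimizer steps, and to use gradient accumulation when the batch does not fit on the available hardware. The reference budget is BERT-base's original setting of 256 sequences per batch for 1M steps; the larger batch sizes, the hardware layout of 8 devices with 32 sequences each, and the helper names are illustrative assumptions.

```python
# Reference budget: BERT-base trained on 256-sequence batches for 1M steps.
BUDGET_SEQUENCES = 256 * 1_000_000


def steps_for_budget(total_sequences: int, batch_size: int) -> int:
    """Optimizer steps needed to process `total_sequences` at `batch_size` (rounded up)."""
    return (total_sequences + batch_size - 1) // batch_size


def accumulation_steps(batch_size: int, per_device_batch: int, num_devices: int) -> int:
    """Micro-batches to accumulate per optimizer step so that
    per_device_batch * num_devices * accumulation == batch_size."""
    per_step = per_device_batch * num_devices
    if batch_size % per_step != 0:
        raise ValueError("batch_size must be a multiple of per_device_batch * num_devices")
    return batch_size // per_step


if __name__ == "__main__":
    # Hypothetical hardware: 8 devices, each holding 32 sequences per micro-batch.
    for batch_size in (256, 2048, 8192):
        steps = steps_for_budget(BUDGET_SEQUENCES, batch_size)
        accum = accumulation_steps(batch_size, per_device_batch=32, num_devices=8)
        print(batch_size, steps, accum)
    # 256 1000000 1
    # 2048 125000 8
    # 8192 31250 32
```

Holding the sequence budget fixed keeps the amount of data seen constant, but a larger batch also means fewer parameter updates, so the learning rate typically has to be retuned for each batch size.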

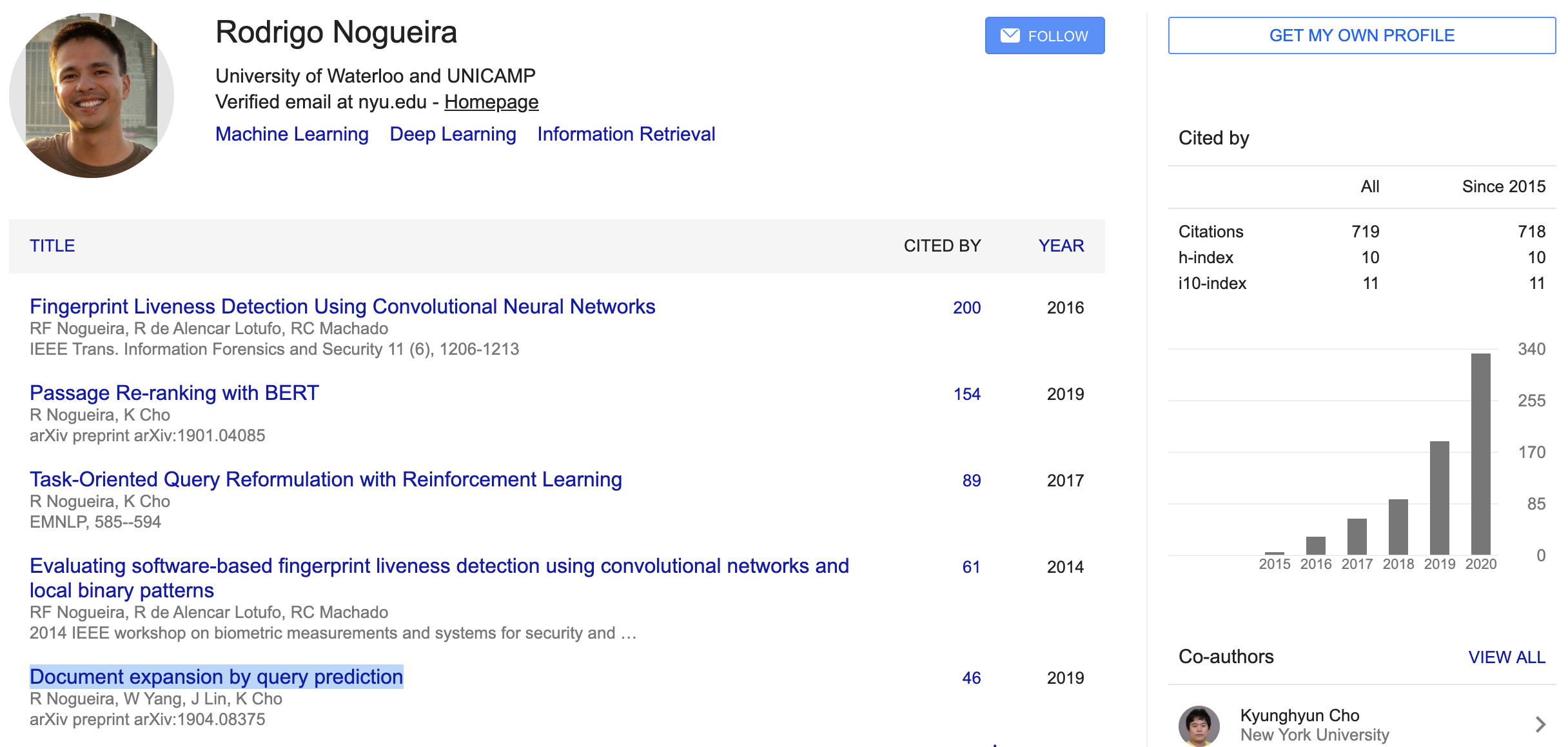

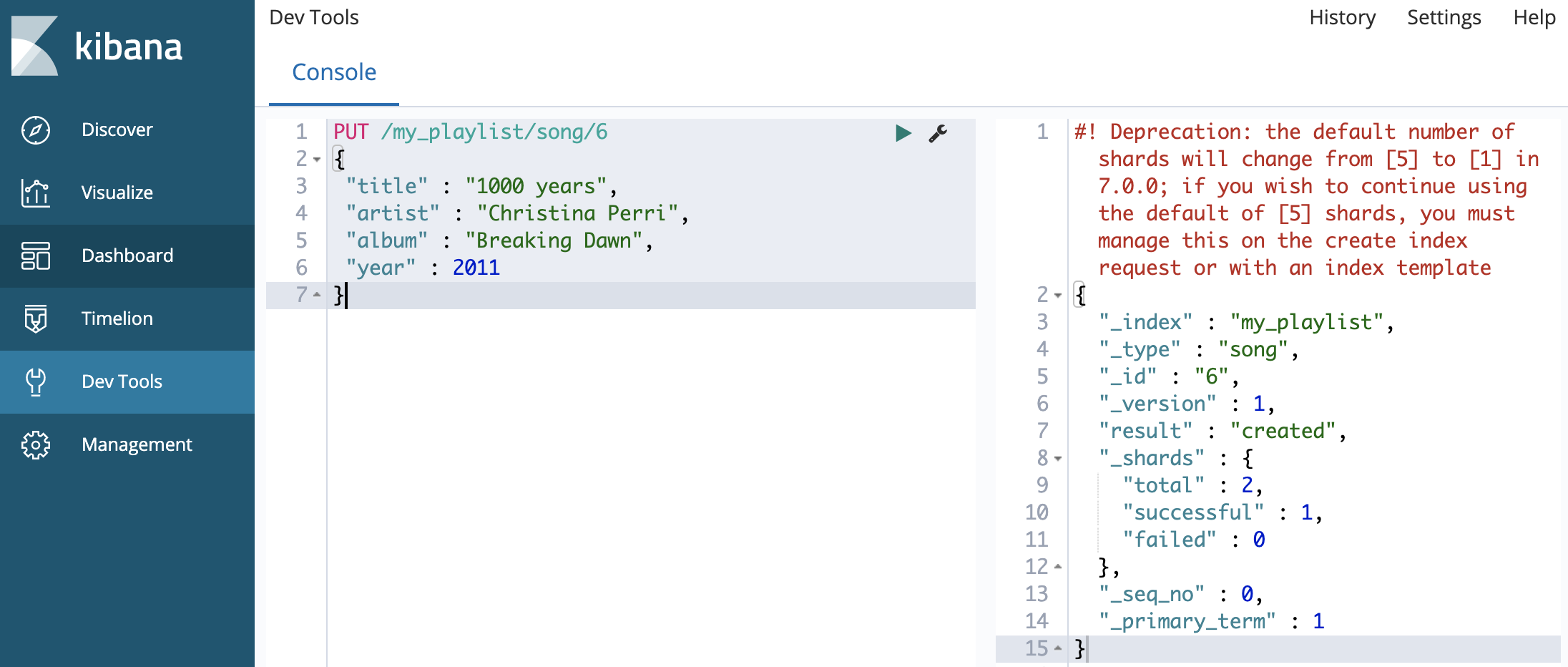

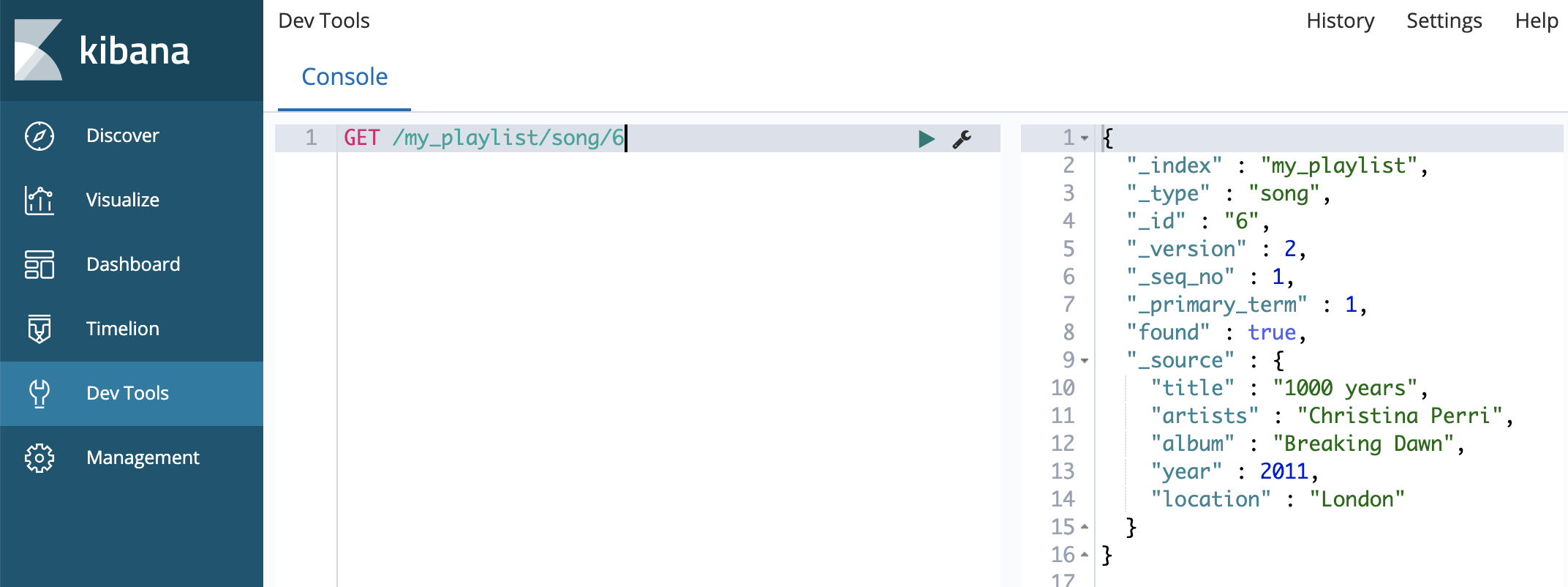

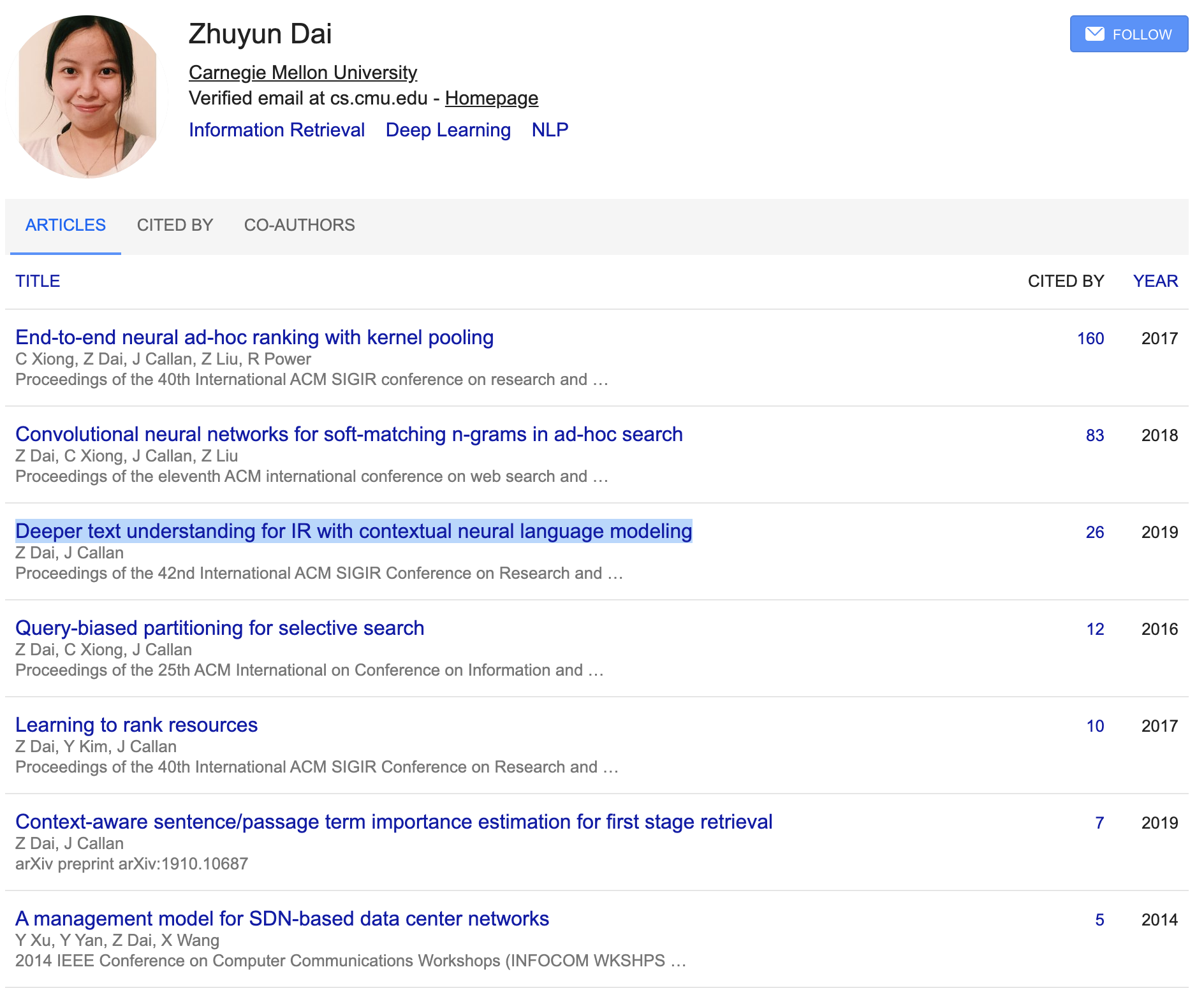

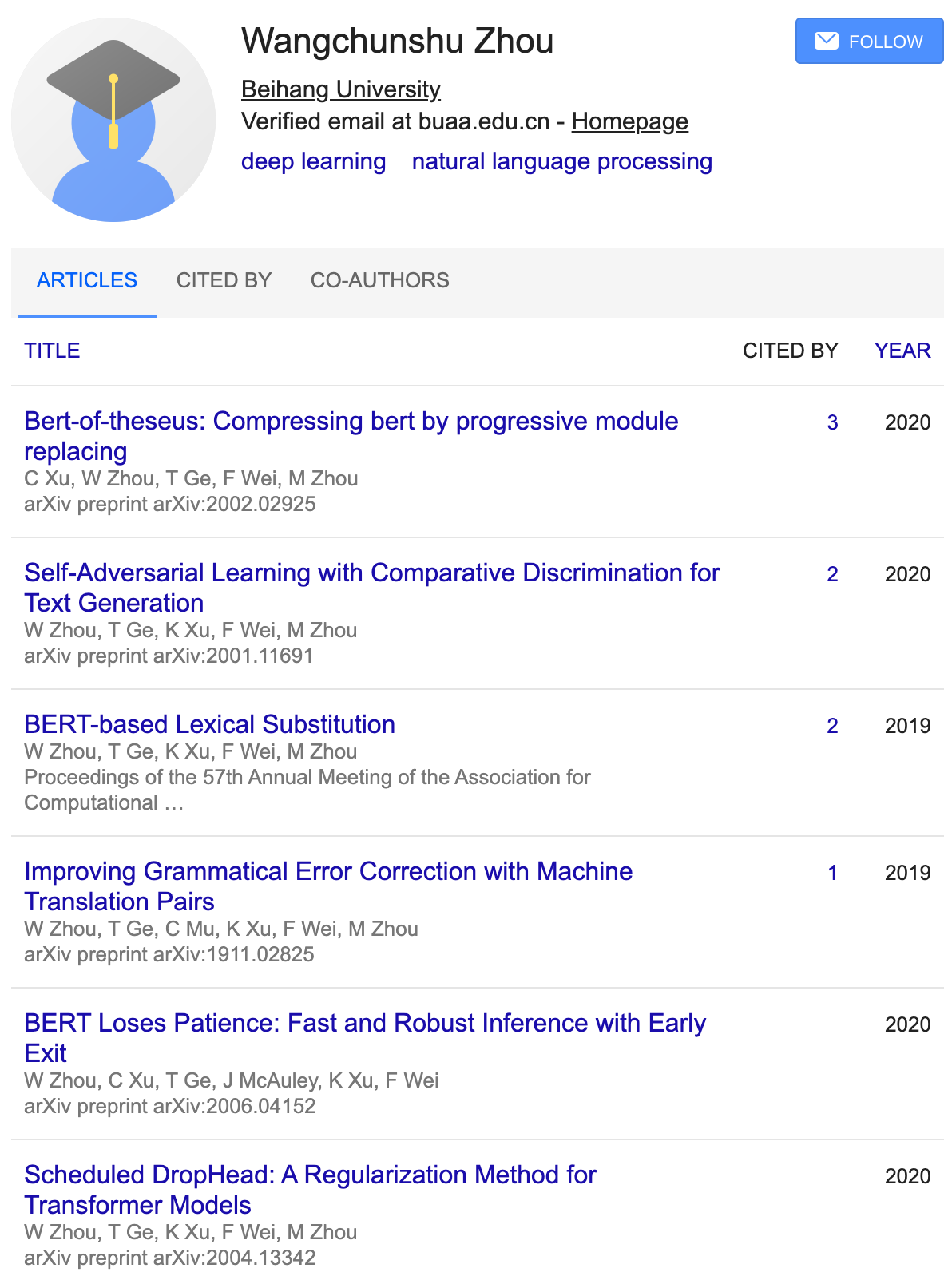

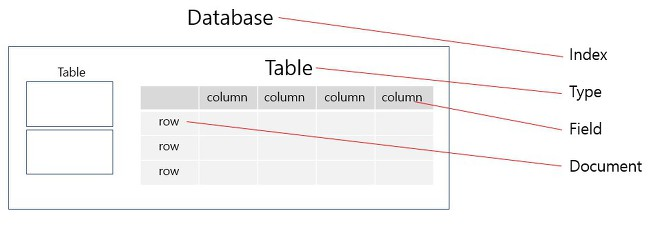

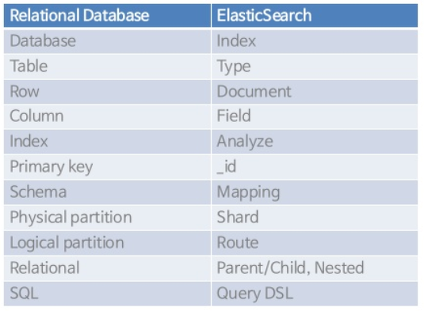
